Editor's Note: This post has been republished from MarketMuse's website. MarketMuse is a Marketing AI Institute partner.

Back in September 2020, when I first wrote about comparing MarketMuse First Draft with GPT-3, there weren’t many examples from which to draw. GPT-3 was still closed to the general public. Fast forward to 2021, and numerous startups are using GPT-3’s API to power their platforms.

Which made me curious. How does MarketMuse’s proprietary natural language model known as First Draft compare against GPT-3? My experience the first time around wasn’t that great. The sample GPT-3 output I saw at the time left much to be desired. In hindsight, I shouldn’t have expected much. Typing in a topic title and expecting a decent piece of long-form content in return is quite a stretch. It still is.

This time around I took a different approach.

Enter Snazzy.AI, a GPT-3-powered app that adds its own machine learning layer. Snazzy has a number of templates geared towards short-form content such as Google Ads, Facebook Ads, landing pages, and product descriptions.

New to its platform is what it calls its Content Expander, which “expands a single sentence or bullet points into a complete thought.” That didn’t quite sound like long-form content to me, but I thought it was worth a shot.

So here’s what I did.

Setting Up the NLG Experiment

Snazzy’s Content Expander works like this: you provide a topic and a summary, along with some additional information, and it generates text. The length of the output seems arbitrary.

For that additional information, I provided Snazzy with the 10 most important topics from the MarketMuse topic model for it to use as “Branded Keywords.”

The topic, by the way, is “glucagon as a non-invasive diabetic treatment.” Heavy stuff, for sure!

For this experiment, I used the output from MarketMuse First Draft. Keep in mind this is already a well-structured and topically rich piece of content created through natural language generation.

I entered the first subsection heading along with its first paragraph as priming material for the GPT-3 engine. I repeated this process for each additional subsection in the article, essentially stitching the generated text together, section by section.
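For readers who want to picture the stitching process, here is a minimal Python sketch. It is not Snazzy’s API or the exact workflow I used in the app: `stitch_sections`, `generate`, and `fake_generate` are hypothetical names, and `fake_generate` is a stand-in for whatever GPT-3-backed tool produces the continuation. The headings and primer text are placeholders too.

```python
from typing import Callable, List, Tuple

# (subsection heading, first paragraph used as the primer)
Section = Tuple[str, str]


def stitch_sections(sections: List[Section], generate: Callable[[str], str]) -> str:
    """Prime the generator once per section and join the results in order."""
    parts = []
    for heading, primer in sections:
        # The prompt is just the heading followed by the priming paragraph.
        prompt = f"{heading}\n\n{primer}"
        # One generation per section, raw output kept as-is (no editing).
        continuation = generate(prompt).strip()
        parts.append(f"{heading}\n\n{primer}\n\n{continuation}")
    return "\n\n".join(parts)


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a text generator; echoes the heading."""
    return f"[generated text for: {prompt.splitlines()[0]}]"


if __name__ == "__main__":
    placeholder_sections = [
        ("Heading A", "Primer paragraph A."),
        ("Heading B", "Primer paragraph B."),
    ]
    print(stitch_sections(placeholder_sections, fake_generate))
```

Swapping `fake_generate` for a real generator would be the only change needed; the loop itself simply mirrors the one-pass, section-by-section approach described above.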

The Results

| | Content Score | Word Count |
| --- | --- | --- |
| MarketMuse First Draft | 31 | 1,760 |
| GPT-3 (Snazzy) | 26 | 953 |

The results were quite good all around. Normally, MarketMuse First Draft output hits the Target Content Score. But in this case, I kept the same older output used in my previous comparison post, while the topic model (from which Target Content Score is derived) is the most current.

The GPT-3 output performed well, with a Content Score about 16% lower than that of MarketMuse First Draft (26 versus 31). It also accomplished this with far fewer words, 953 compared to 1,760.
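As a quick check on the comparison, the gap can be worked out from the scores and word counts reported in the results. This is only a sketch of the arithmetic: `relative_gap` is a hypothetical helper, and treating Content Score as a simple linear quantity is an assumption on my part.

```python
# Figures taken from the results reported above.
first_draft = {"score": 31, "words": 1760}
gpt3 = {"score": 26, "words": 953}


def relative_gap(baseline: float, other: float) -> float:
    """Fraction by which `other` falls short of `baseline`."""
    return (baseline - other) / baseline


score_gap = relative_gap(first_draft["score"], gpt3["score"])
word_gap = relative_gap(first_draft["words"], gpt3["words"])

print(f"Content Score gap: {score_gap:.1%}")  # 16.1%
print(f"Word count gap:    {word_gap:.1%}")   # 45.9%
```

In other words, the GPT-3 output scored roughly one sixth lower while using a little over half as many words.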

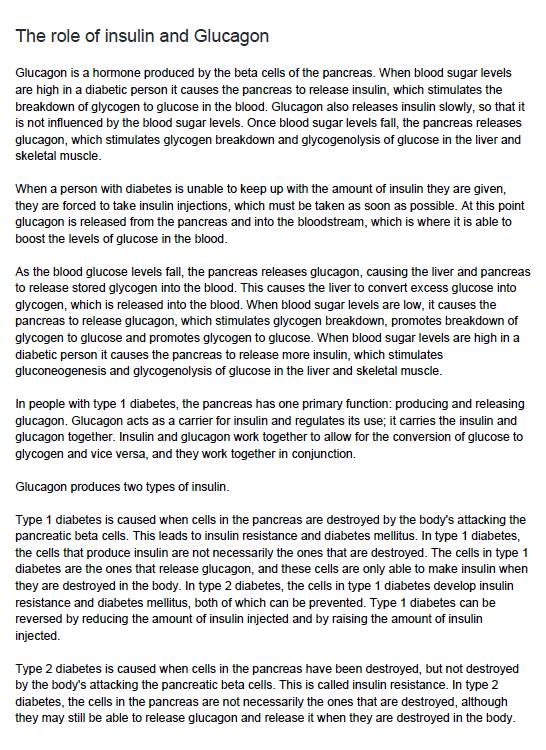

MarketMuse First Draft

Here’s a snippet of MarketMuse First Draft.

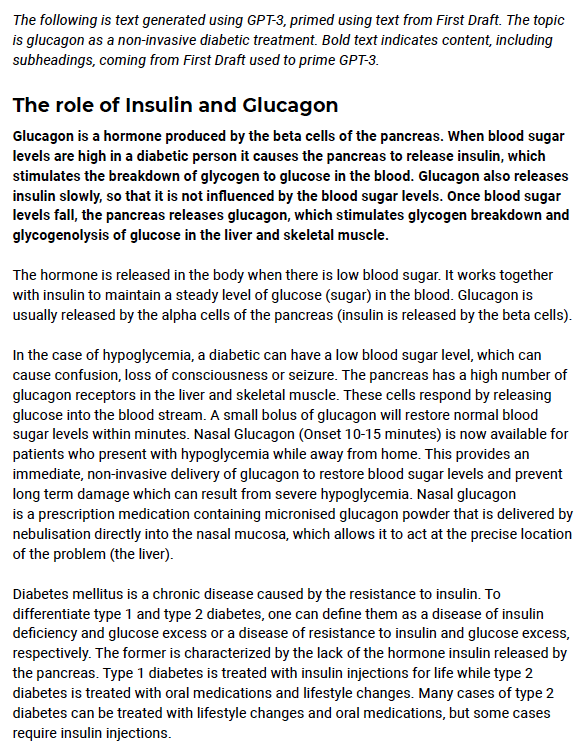

GPT-3 (Snazzy) With MarketMuse First Draft

Here’s part of the NLG output from Snazzy including the subheadings and text used to prime the GPT-3 generation.

Conclusion

The GPT-3 output from Snazzy is quite impressive and nicely complements that of MarketMuse First Draft. Overall, it reads well.

Upon reflection, the resulting content is really a collaboration between two NLG platforms, MarketMuse First Draft and GPT-3. MarketMuse First Draft provided 25% of the content as a primer, and GPT-3 generated the balance. While that may seem excessive, I suspect that GPT-3’s model needs a sufficient amount of existing content in order to set the direction.

Certainly, generating long-form content is a different beast than, say, creating a Facebook ad, for a number of reasons.

Lastly, keep in mind that no editing was done. Also, I didn’t run multiple generations through Snazzy; I ran one instance for each section and took the raw output.

Stephen Jeske

Stephen leads the content strategy blog for MarketMuse, an AI-powered Content Intelligence and Strategy Platform.