Marketers have an AI problem.

Marketers are beginning to understand AI’s promise: some machine learning technologies can help predict and prescribe marketing actions at scale, based only on your customer and user data. AI systems exist that can help you make decisions that reduce churn and take action on customer needs.

But what happens when you need to explain the actions an AI system took to your boss?

Explainability is critical to understanding why an AI system did what it did. Without knowing why an AI system recommends what it does, the business use case for AI in marketing becomes limited.

For example, if a subscriber is predicted to churn, but you don’t know why, how do you know what preventative action to take? Multiply this across the entire customer lifecycle and millions of customers in a digital 5G world, and you have a critical and expanding problem—lack of contextual insight and actionability at scale.

Traditional methods for making predictions are explainable, but they don’t have the scale and power of machine-learning-powered marketing systems. And traditional marketing analytics approaches don’t scale for today’s needs, either.

Marketing measurement today is primarily one-dimensional. Marketing analytics teams still run mostly statistical approaches for modeling and segmentation to drive periodic campaigns and track one-dimensional measurements such as response, click-throughs, purchases, etc.

These traditional metrics, although useful for assessing campaign performance over time, are slow and static, and offer only surface-level information. It’s tip-of-the-iceberg stuff that makes it difficult to see the real drivers of customer behavior at a granular, yet consumable, level.

Consumer insights are shallow and outdated. Drilling down into customer behavior specifically is cumbersome. Usually this is done through the lens of segmentation, which is mainly descriptive in nature, static, and out of date. This type of analysis reveals interesting findings, but not dynamic, contextually relevant insights or predictive, immediately actionable insights that keep up with customers’ daily interactions with your business.

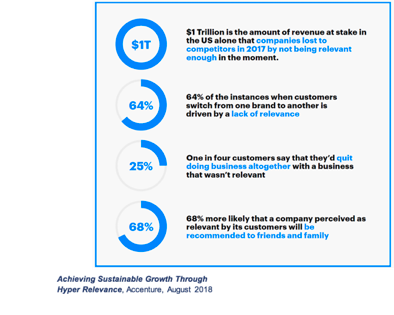

This is a big problem in a world where relevance is expected by customers every time they interact with you. Customers aren’t static, and your segmentation architecture shouldn’t be either.

Privacy and data usage create more constraints. Privacy and data use issues are becoming more important as companies collect more data, consumers generate more of it, and governments move to regulate how data is shared. Models now need not only to perform well but also to be easy to audit and track, so that they comply with legislative and ethical requirements. We’re seeing this formalized in Europe’s GDPR rules, and will likely see more of it in the near future.

Machine learning is a major transformational reality for marketers. Like digital channels for frictionless sales and service, if your competitors adopt it successfully and you don’t, they can leapfrog you in terms of speed, efficiency and precision.

However, everyone ultimately runs into AI’s “black box” problem, which is curtailing the adoption of AI in marketing.

Although machine learning algorithms are highly precise, if they can’t tell you why someone is likely to respond, purchase, be a high-value customer, cost money or churn, then it’s impossible to have insights that are contextually relevant.

In its report “Achieving Sustainable Growth through Hyper Relevance,” Accenture reports on the huge advantage relevant marketers achieve for their companies.

Purely analytics-driven digital businesses have an advantage. But if you are a large brand with traditional channels, there is so much data to consolidate and streamline that it requires AI-enabled methods to make sense of it all at speed and scale.

However, there are many challenges when trying to get more explainability from AI, depending on which type you use.

Neural networks have one set of issues, while decision trees and gradient boosting models have another. In a recent blog post, I explore a number of the challenges and the way similarity, as a method, opens up the opportunity for marketers to take advantage of AI’s scale and precision while generating the critical insights that marketers need but cannot get from traditional methods.

The Power of Explainable AI

Imagine that, in today’s fast-moving environment with its numerous digital customer interactions, you could dynamically update machine-driven customer segments every day, and that those segments revealed the primary features associated with every predicted buyer’s purchase behavior.

Now imagine that you could use those machine-driven insights—the primary drivers of predicted purchase behavior—to match a relevant offer to a customer, either outbound or inbound, automatically.

Imagine that you could see new segments with predictive characteristics in common that you didn’t see before: a group of customers, for example, whose recent online searches led to in-store purchases within 48 hours. What if you could instantly find customers who look like them and ensure that, when searching online, they received ads promoting the nearest store location?
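To make the lookalike idea concrete, here is a minimal sketch in Python. It assumes a plain nearest-neighbor approach: average the seed customers into a profile, then keep anyone within a distance cutoff of that profile. The feature names, customer IDs, values and the `max_distance` threshold are all hypothetical, and this is an illustration of similarity in general, not the method any particular vendor uses.

```python
import math

# Hypothetical features, each already scaled to the 0-1 range.
FEATURES = ["online_searches_7d", "store_visits_30d", "distance_to_store"]

# Hypothetical customers; the two seeds searched online and then bought in store.
customers = {
    "seed_a": [0.9, 0.8, 0.1],
    "seed_b": [0.8, 0.9, 0.2],
    "cust_1": [0.85, 0.75, 0.15],
    "cust_2": [0.1, 0.2, 0.9],
    "cust_3": [0.7, 0.9, 0.3],
}
seeds = ["seed_a", "seed_b"]


def centroid(vectors):
    """Average the seed customers into a single profile."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_lookalikes(customers, seeds, max_distance=0.3):
    """Return non-seed customers close to the seed profile, nearest first.

    Each match carries its per-feature gaps, so a reviewer can see
    which features made the customer look like the seeds.
    """
    target = centroid([customers[s] for s in seeds])
    matches = []
    for customer_id, vector in customers.items():
        if customer_id in seeds:
            continue
        d = distance(vector, target)
        if d <= max_distance:
            gaps = {
                name: round(abs(x - t), 3)
                for name, x, t in zip(FEATURES, vector, target)
            }
            matches.append((customer_id, round(d, 3), gaps))
    return sorted(matches, key=lambda match: match[1])


lookalikes = find_lookalikes(customers, seeds)
for customer_id, d, gaps in lookalikes:
    print(customer_id, d, gaps)
```

The per-feature gaps are the point of the exercise: every match comes with the reason it matched, which is what lets a marketer pair the segment with a relevant offer.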

With AI, the speed is there at high volume. With fully explainable AI, both the speed and the contextual insights needed to drive relevancy are there. And when combined with flexible media platforms, DSPs and creative advertising technologies, it becomes possible to be both state of the art and fast. Exploring, testing and taking measurable action steps is vital in today’s hyper-relevant world.

Back to the issue of the ethical use of data.

We have seen tremendous consternation regarding data privacy, particularly in the headlines about Facebook. But beyond that, we have seen Amazon abandon its AI recruiting models over gender bias and a lack of explainability. We have seen the Federal Reserve Board of Governors call for AI explainability to ensure that consumers have access to credit without bias, along with a right to an explanation for adverse decisions.

Even when marketers exclude “sensitive” variables from models, an algorithm may simply replace those variables with other proxy variables that have the same effect. Explainability offsets this concern by enabling regular, auditable reviews of machine learning predictions and the factors driving them.
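As a rough illustration of what such a review might include, the sketch below checks whether any feature still used by a model is strongly correlated with a sensitive attribute that was deliberately left out. The data, the feature names and the 0.7 threshold are hypothetical, and a simple Pearson correlation is only one possible screen; a real audit would likely need more than this.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column cannot act as a proxy
    return cov / (spread_x * spread_y)


# Hypothetical audit sample: the sensitive attribute is excluded from the
# model but retained separately so that reviews like this one are possible.
sensitive = [1, 1, 1, 0, 0, 0, 1, 0]

# Hypothetical features the model does use.
model_features = {
    "zip_code_group": [1, 1, 1, 0, 0, 0, 1, 1],
    "monthly_spend": [40, 55, 38, 52, 41, 57, 49, 44],
}


def flag_proxies(features, sensitive, threshold=0.7):
    """Return features whose correlation with the sensitive attribute
    meets or exceeds the (assumed) threshold in absolute value."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, sensitive)
        if abs(r) >= threshold:
            flagged[name] = round(r, 2)
    return flagged


flagged = flag_proxies(model_features, sensitive)
print(flagged)
```

A flagged feature is not automatically a problem, but it tells the reviewer where to look when an explanation shows that feature driving predictions.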

Leading Marketers Will Increasingly Deploy Explainable AI Applications

If you are a marketer, looking to explainable AI (XAI) applications makes a lot of sense for the reasons explored here.

Of course, there are applications where explainability is not as critical, and where AI is often already used without much notice. Programmatic ad buying and very simple ad-targeting decision systems are examples.

But those tend to be prospect-oriented marketing activities. When it comes to customers, there are many reasons to carefully research and try XAI technologies and applications. They may give you the advantage you need to compete on relevancy while maintaining ethical data use and privacy practices in the most economical way.

Most importantly, it may help you keep your most valuable customers away from your competitors.

Dave Irwin

Dave Irwin is the CMO of simMachines, a machine learning software company based in Chicago. Dave is a 25-year industry veteran with expertise in analytics-driven marketing spanning traditional CRM, digital and addressable TV and online video.